How Do AI Detectors Work: A Complete Guide

Until recently, content creation was a human prerogative. Today, however, a large and growing share of content, be it a short text or a picture, is produced by Artificial Intelligence (AI).

Not everyone is happy about that, as AI-generated content is often too generic and misses the originality and insights that only human-made content can provide.

This is how AI detectors came to be. They stormed into the digital world and every content creator’s life in the past two years. Now, they are ubiquitous and widely used to detect content made by AI.

How do AI detectors work in theory? Do AI detectors work as intended in real life? Are they always reliable and accurate, or do they make mistakes? We'll attempt to answer these and many other vital questions in the current guide.

AI detectors - what’s the buzz?

Artificial Intelligence (AI) came into the spotlight of public attention with the release of ChatGPT in 2022. However, that’s not the year of AI's birth!

AI had been in the IT geeks’ labs for decades (the field dates back to the 1950s) before the ChatGPT release. In 2022, it merely showed the public what it was capable of via a chat interface.

ChatGPT monthly visits progress over time

Large language models enter the stage

What the world saw in 2022 was the interface between AI and humans - the so-called Large Language Models (LLMs). One example was made by OpenAI and powers ChatGPT; others are Gemini by Google and Claude by Anthropic. These are advanced AI systems trained to understand, generate, and manipulate human language.

With fame, however, comes responsibility and resistance. People quickly became addicted to LLMs and what they could do. We began to rely extensively on their content-generating abilities to write human-like texts, do students’ homework, write developers’ code, and even create music and poetry!

The mass use of AI began endangering human work and creativity. We needed to draw a line between machine-made and human content.

However, the human mind finds it challenging to distinguish between genuine human-made and AI-generated content. We desperately needed assistance.

That’s when and why we invented AI detectors.

What is an AI detector?

An AI detector is a system or a tool designed to identify AI-generated content, be it a piece of text, audio, video, or music. Advanced AI detectors reverse-engineer a piece of content to see whether a machine (AI) would have produced the same thing.

What do AI detectors look for exactly? They are tuned to detect the patterns and signs that an AI generator typically leaves behind. If a text contains too many generic phrases and expressions, an AI checker will likely classify it as machine-generated work.

AI detectors rely on complex statistical analysis to examine a piece of content. Oftentimes, they calculate the probability that the content is machine-made. For example, a detector might report a 75% probability that a given piece of content was made by a human (or by a machine).
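
To make the percentage output more concrete, here is a minimal sketch of how a raw detector score could be turned into the kind of verdict described above. The function names and the use of a logistic function are assumptions made for illustration; real detectors do not publish how they compute their figures.

```python
import math


def to_probability(raw_score: float) -> float:
    """Squash an unbounded score into the range (0, 1) with a logistic function.

    Assumption: positive scores mean "looks more like AI".
    """
    return 1.0 / (1.0 + math.exp(-raw_score))


def format_verdict(p_ai: float) -> str:
    """Report whichever origin is more likely, as a rounded percentage."""
    if p_ai >= 0.5:
        return f"{round(p_ai * 100)}% likely AI-generated"
    return f"{round((1 - p_ai) * 100)}% likely human-written"


if __name__ == "__main__":
    print(format_verdict(0.25))
    print(format_verdict(to_probability(2.0)))
```

Note that the same number can be shown from either side: a 25% chance of AI origin and a 75% chance of human origin describe the same result.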

Examples of some popular AI detectors are:

- Copyleaks AI Detector

- GPTZero

- ZeroGPT

- Turnitin AI Detection

- Originality AI

- Winston AI detector

- QuillBot

There are many others, literally hundreds of them online. New AI detectors are popping up here and there like mushrooms after the rain each month.

The cause - the proliferation of AI assistants


AI content-generating tools are immensely popular today because of the speed and ease with which they produce results.

Just think of it: instead of spending hours and days writing a term paper, a student can effortlessly generate one using an AI assistant. That’s cheating, you would say! It is, indeed.

The same goes for marketing content creators, copywriters, journalists, and anyone whose work is associated with writing. However, the possibilities of AI for producing content don’t end here.

Another common application of AI generators today is software development. Programmers rely extensively on advanced AI tools like the latest edition of ChatGPT or Gemini to write code. These tools save them loads of time and, more importantly, often produce working code, although the output still needs human review.

Does it all devalue human work and effort? Absolutely.

Why would someone need an AI detector?

A short answer - to appreciate and protect human-made content.

In academia, using AI tools is a real problem. How do AI detectors work for essays, you may wonder? Reasonably well, though not flawlessly. Teachers have quickly adopted AI checkers in their daily work and penalize students who try to cheat using AI assistants.

In marketing and journalism, using AI-generated content diminishes human effort and creativity. After all, AI content is far from perfect; it lacks human insights, originality, and creativity.

We need AI detectors to distinguish between low-value AI-generated content and high-value, original human content.

As of today, AI assistants don’t produce new content; they combine existing content in various ways to produce something general. They are also known to hallucinate! That’s when they come up with something, e.g., an answer, that is either false or invalid. They fake things!

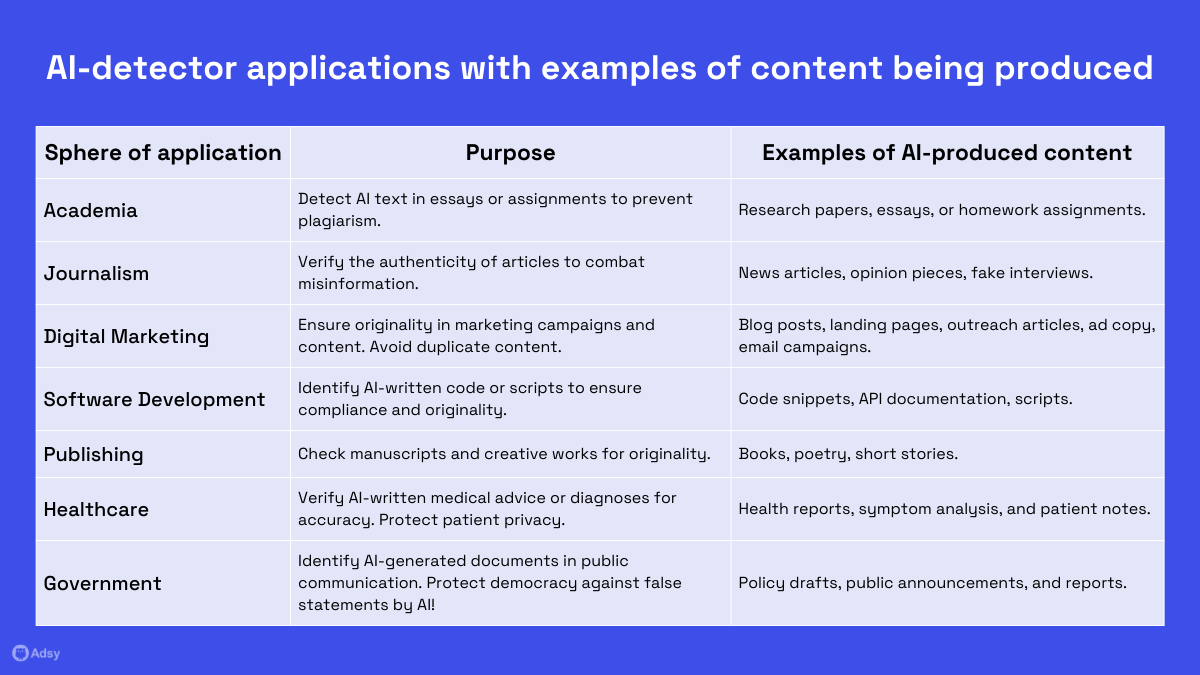

Here is a summary of AI-detector applications with examples of content being produced.

How do AI detectors detect AI?

AI detectors display results as percentages: either the share of the content that appears AI-generated or the likelihood that it is human-made. They seem pretty “confident” in what they do and how they do it.

However, are they reliable? How do AI detectors work in real-life situations? We’ll look at them under a microscope and see what they can do.

Model training

Model training is the process by which modern AI detectors learn to distinguish between human and AI-generated content. It resembles the training of an AI system itself, i.e., it is a machine learning process in which the model (AI scanner) is exposed to a large dataset.

For the AI detectors, such datasets consist of two large chunks of data:

- Human-made content data (millions of samples of content created by humans);

- AI-made content data (previous records of AI-generated content).

Loosely speaking, modern AI detectors are pre-trained machines, as they’ve acquired their “expertise” from large datasets created by AI and humans. They need this training before they can do any detecting, and during it they pick up the distinguishing patterns from examples, pretty much like a child learns to assemble a Lego set.
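
The two-dataset idea can be sketched with a tiny word-count classifier. This is only an illustration under stated assumptions: the four training sentences are made up, the Naive Bayes method is a stand-in chosen for brevity, and commercial detectors use far larger datasets and models.

```python
import math
from collections import Counter

# Hypothetical toy dataset: (text, label) pairs standing in for millions of samples.
TRAINING_DATA = [
    ("honestly i kinda loved that weird little movie", "human"),
    ("ugh my train was late again so i missed lunch", "human"),
    ("in conclusion it is important to note that technology plays a crucial role", "ai"),
    ("furthermore it is important to consider the crucial role of innovation", "ai"),
]


def tokenize(text):
    return text.lower().split()


def train(samples):
    """Count how often each word appears in human and in AI samples."""
    word_counts = {"human": Counter(), "ai": Counter()}
    doc_counts = Counter()
    for text, label in samples:
        word_counts[label].update(tokenize(text))
        doc_counts[label] += 1
    vocab = set(word_counts["human"]) | set(word_counts["ai"])
    return word_counts, doc_counts, vocab


def predict_ai_probability(model, text):
    """Return the estimated probability that the text is AI-generated."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    log_scores = {}
    for label in ("human", "ai"):
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / total_docs)
        for word in tokenize(text):
            if word not in vocab:
                continue  # words never seen in training carry no evidence
            # Add-one (Laplace) smoothing avoids zero probabilities.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        log_scores[label] = score
    # Convert the two log scores into a normalized probability.
    top = max(log_scores.values())
    weights = {label: math.exp(s - top) for label, s in log_scores.items()}
    return weights["ai"] / (weights["ai"] + weights["human"])


if __name__ == "__main__":
    model = train(TRAINING_DATA)
    print(predict_ai_probability(model, "it is important to note the crucial role"))
    print(predict_ai_probability(model, "ugh i missed that weird movie"))
```

The sketch also shows why the quality of the training data matters: the model can only recognize patterns that were present in the samples it was given.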


Feature analysis

What do AI detectors look for in written content? Once the AI-detector model is given written content, it breaks it into pieces and analyzes them individually. In doing so, it looks for the patterns and features that distinguish AI content from human content:

- Lexical features: vocabulary choice, word variety, expressions, sentence structure, length, etc.

- Syntactic features: grammar used (e.g., humans can make grammar mistakes), punctuation patterns (humans may alternate between punctuation styles, whereas a machine would stick to the same pattern).

- Stylistic features: writing style and tone, logical flow, coherence, repetition of lexical constructions, etc.

In feature analysis, AI detectors often rely on statistical techniques such as correlation and regression.
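
A few of the features listed above can be computed with very little code. The sketch below is an assumption-laden simplification: the feature names and the regular expressions are chosen for illustration, and a real detector would measure many more signals.

```python
import re
import string


def extract_features(text):
    """Compute a handful of simple lexical and syntactic features."""
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    punctuation = [ch for ch in text if ch in string.punctuation]
    return {
        "word_count": len(words),
        # Word variety: unique words divided by total words.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
        "avg_sentence_length": len(words) / len(sentences) if sentences else 0.0,
        "punctuation_per_word": len(punctuation) / len(words) if words else 0.0,
    }


if __name__ == "__main__":
    print(extract_features("The cat sat. The cat ran!"))
```

Numbers like these become the inputs to the statistical model, which then weighs them against what it learned during training.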

Reverse engineering


AI detectors are trained to adapt to specific AI models, e.g., ChatGPT, Claude, and Gemini. In other words, they learn from different models to ensure accurate detection. Irrespective of which AI model was used to generate content, the AI detector should be able to detect non-human content.

Each large language model (LLM) has its unique content characteristics, and an effective AI detector must be able to pick up on the subtlest of differences.

In practice, an AI detector reverse-engineers a piece of content using a specific language model to see whether that model was likely used to create it.
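
One common way to approximate this idea is perplexity: a measure of how "surprised" a language model is by a text. The article does not name this technique, so treat the sketch below as an assumption. It uses a toy word-frequency model in place of a real LLM, and the reference sentence is invented for the example.

```python
import math
from collections import Counter


def build_unigram_model(reference_text):
    """Build a toy word-frequency model that stands in for a real LLM."""
    counts = Counter(reference_text.lower().split())
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot shared by all unseen words

    def probability(word):
        # Add-one smoothing so unseen words get a small non-zero probability.
        return (counts[word] + 1) / (total + vocab_size)

    return probability


def perplexity(probability, text):
    """Lower perplexity means the text is more predictable to the model."""
    words = text.lower().split()
    if not words:
        raise ValueError("text must contain at least one word")
    log_sum = sum(math.log(probability(word)) for word in words)
    return math.exp(-log_sum / len(words))


if __name__ == "__main__":
    model = build_unigram_model("the model writes the text the model knows")
    print(perplexity(model, "the model writes"))
    print(perplexity(model, "purple giraffes yodel"))
```

Text that the model finds very predictable (low perplexity) is more likely to have come from that model or a similar one, which is the intuition behind the reverse-engineering step.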

Since modern AI models progress rapidly (Elon Musk, for example, has claimed that AI gets smarter as fast as 10 times a year), AI detectors have difficulty catching up on their own.

How can AI detectors detect AI if they lose in this race for intelligence?

Humans boost AI detectors’ capabilities by applying supervised learning, i.e., giving AI detectors labeled examples that tell them when and what to learn. This is not the most effective approach by far, since LLMs utilize neural network architecture to become smarter. Neural networks mimic the way the human brain learns, allowing LLMs to learn on their own.

Stylometric analysis

Stylometry is derived from the word “style.” It involves measuring different stylistic features, such as:

- Syntax complexity;

- Word frequency;

- Tone and the use of idioms and metaphors.

Stylometric analysis helps AI detectors distinguish between human creativity and machine perfectionism or consistency.

For example, a human being would show greater variability in writing, depending on the mood, competence in a given topic, etc. Meanwhile, a machine's style tends to be more consistent and predictable.

Things like overly formal language, repetitive structures, and common patterns signal to an AI detector that this is machine-made content.

Conversely, anomalies in structure and unique, non-standard handling of key elements (in writing, these can be the introduction, main body, and conclusion) point to human authorship.
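
The difference between human variability and machine consistency can be measured. The sketch below computes how much sentence length varies within a text, a quantity sometimes called "burstiness" (a term not used in this article, so treat it as an assumption). The sample sentences are invented.

```python
import re
import statistics


def sentence_lengths(text):
    """Return the number of words in each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Standard deviation of sentence length divided by the mean length.

    A value of 0.0 means every sentence has the same length; higher values
    mean more variation.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


if __name__ == "__main__":
    uniform = "The sky is blue. The sea is calm. The sun is warm."
    varied = "Wow. I did not expect the ending of that film to hit me so hard. Really."
    print(burstiness(uniform))
    print(burstiness(varied))
```

A low score on its own proves nothing, since plenty of human writing is evenly paced; it is one signal among many.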

Challenges in detecting AI outputs

Do AI detectors work as intended? Can we rely on them? Or do they make mistakes and fail to accurately determine the content’s author? Let’s find out together.

Spoiler: there will be no simple answers here, as things are never simple when the subject is intelligence.


False positives and false negatives

AI detectors aren’t always accurate. As if acknowledging that, they tend to avoid giving a straightforward answer, such as a text being human-made or entirely AI-generated.

Instead, modern AI detectors provide percentages or likelihoods of content being either of human or machine origin. For example, a given text has a 65% likelihood of being human-written.

Nevertheless, false positive and false negative assessments are very common.

- False positives are when detectors mistakenly classify content as AI-generated.

- False negatives happen when detectors fail to identify AI-generated content.
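
The two error types defined above can be counted once you know the true origin of each text. In this minimal sketch, the labels "human" and "ai" and the sample verdicts are made up for illustration.

```python
def error_rates(true_labels, predicted_labels):
    """Compute false positive and false negative rates for a detector.

    False positive: human text flagged as AI.
    False negative: AI text passed as human.
    """
    false_positives = false_negatives = humans = ais = 0
    for truth, guess in zip(true_labels, predicted_labels):
        if truth == "human":
            humans += 1
            if guess == "ai":
                false_positives += 1
        else:
            ais += 1
            if guess == "human":
                false_negatives += 1
    return {
        "false_positive_rate": false_positives / humans if humans else 0.0,
        "false_negative_rate": false_negatives / ais if ais else 0.0,
    }


if __name__ == "__main__":
    truth = ["human", "human", "human", "human", "ai", "ai"]
    verdicts = ["human", "ai", "human", "human", "ai", "human"]
    print(error_rates(truth, verdicts))
```

In this made-up sample, one of four human texts is wrongly flagged and one of two AI texts slips through, so the two rates are 25% and 50%.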

1. False positives

False positives are common. They happen because AI assistants get disruptively smarter every year, and the detectors’ algorithms fail to adapt. The line between human-made and machine-made content becomes increasingly blurred.

Some common reasons for false positives include:

- Overly formalistic language;

- Clear, structured, and to-the-point language;

- Absence of errors and perfect grammar;

- Lack of data in the detector’s model about human-made content on a given topic.

False positives frequently occur with technical documentation, legal documents, and business and diplomatic correspondence. Even the US Constitution is sometimes mistaken by AI scanners for machine-made content!

2. False negatives

False negatives are no less common. After all, no AI checker is perfect. They may fail to identify AI-generated content for the following reasons:

- When people use advanced AI models to generate content (since AI detectors rely on human instructions, a relatively slow process, they simply fail to catch up with what the latest AI assistant can do);

- When a deliberately “humanized” style and language are applied in post-processing (i.e., when a human author adds idioms, metaphors, and an excessively emotional tone of voice).

If you deliberately make mistakes or skip the proofreading phase in your writing, you may trick the machine into “believing” your text is 100% human-made, even though some parts were machine-generated.

The cat-and-mouse game: AI vs. detectors


The ongoing battle between AI advancements and the ability of AI detectors to keep up with these changes resembles the famous cat-and-mouse game. The cat, an AI model, becomes more intelligent and works at a speed never seen before.

Even modest estimates claim that AI models become about four times more capable each year. Moreover, LLM output gets harder and harder to distinguish from human language.

For example, this is what the latest GPT models from OpenAI and Claude models from Anthropic can do:

- Provide nuanced responses, as they are trained on vast amounts of human-written text;

- Incorporate randomness into their responses;

- Mimic human emotions and writing styles (informal, casual, cheerful, polite, etc.);

- Add variability to sentence structure;

- Use advanced vocabulary, including metaphors and jokes.

These advancements and tricks make the work of AI detectors harder. Do AI detectors work at all under these constantly changing conditions? Sure, they do, but they continually struggle to keep up with the pace of progress in artificial intelligence.

Some detectors become unjustifiably strict and suspicious when working with text. They may mistake even entirely human-made content for AI output.

For instance, the latest edition of Originality AI, a powerful paid AI detector, tends to see AI content in almost every text.

How effective are AI detectors?

To make things even more complicated, certain AI models today come with the so-called “humanizing” feature. What this feature does is deliberately randomize output to mimic human tone and style.

For example, QuillBot and dozens of other AI tools have this feature as standard.

How to spot AI content without a detector

What makes AI text detectable with the naked eye? Can you detect AI content without the help of AI scanners? Actually, you can, provided you are attentive enough to spot the subtle nuances:

- The introduction and conclusion in a typical AI-generated text are too generic and vague. They may contain opening clauses like “In the age of rapid technological change” or “In today’s increasingly digitized world.”

- Repetitive structures, i.e., paragraphs, sentences, or bullet points of nearly identical length.

- Overly dry and formal language, lacking emotions and variability.

- Redundancies and logical gaps, as AI tends to repeat things. Often, the same fact can be mentioned in several paragraphs.

- Lack of nuances. GPT stands for Generative Pre-trained Transformer: the model is pre-trained on existing text, so its output tends to recombine and generalize existing knowledge rather than offer anything new and groundbreaking.
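
Two of the signs from the list above, stock opening phrases and repeated statements, are simple enough to check automatically. The sketch below is a rough illustration: the phrase list contains only the two examples quoted above, and the helper names are assumptions.

```python
import re
from collections import Counter

# Only the two openers quoted in the list above; a real list would be longer.
STOCK_OPENERS = [
    "in the age of rapid technological change",
    "in today's increasingly digitized world",
]


def normalize(text):
    """Lowercase, straighten curly apostrophes, and collapse whitespace."""
    straightened = text.replace("\u2019", "'").lower()
    return re.sub(r"\s+", " ", straightened).strip()


def find_stock_openers(text):
    """Return the stock phrases that appear anywhere in the text."""
    cleaned = normalize(text)
    return [phrase for phrase in STOCK_OPENERS if phrase in cleaned]


def repeated_sentences(text):
    """Return sentences that occur more than once, word for word."""
    sentences = [normalize(s) for s in re.split(r"[.!?]+", text) if s.strip()]
    counts = Counter(sentences)
    return [sentence for sentence, n in counts.items() if n > 1]


if __name__ == "__main__":
    sample = (
        "In today\u2019s increasingly digitized world, AI matters. "
        "AI is everywhere. AI is everywhere."
    )
    print(find_stock_openers(sample))
    print(repeated_sentences(sample))
```

A check like this only catches exact matches; paraphrased repetition still needs a human reader.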

Unfortunately, AI-generated content is already ubiquitous. Try searching for information on any topic online. The first result you’ll come across may well be an AI text (even though a fake human author will be attached to it).

Tellingly, much of what is online today was made by humans utilizing AI assistants. So, it is a recycling of existing knowledge, as AI cannot yet produce anything truly unique and valuable. At least, that is the case at the time of writing.

We may soon see Google and other search engines add a filter to their search window that lets users find only human-made content. By then, Google’s AI-detecting software must be good enough to detect AI content accurately and quickly.

Conclusion

The abundance of AI assistants with advanced language and visual processing capabilities has completely changed the way content is created. AI-generated texts, videos, music, and even software code have become ubiquitous, devaluing human creativity and innovation.

That’s why we invented AI detectors. They help us distinguish between AI-generated and human-made content.

How do AI detectors work? AI detectors or scanners work by performing complex statistical analysis and reverse engineering content.

What do AI detectors look for? They look for patterns and structures characteristic of machine work. An AI checker then displays results as scores or percentages showing the probability that a given piece of content was human-made or AI-made.

The biggest problem is that AI scanners’ results are not always accurate. They make mistakes and may falsely accuse a human author of using an AI tool. Similarly, they often fail to detect AI-made content, e.g., when the content is on a rare topic or when a literary style was applied to mask the work.

We advise using several AI detectors to maximize detection accuracy and minimize the likelihood of errors. Additionally, there are several known techniques to spot AI with the naked eye, so to speak.
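
Combining several detectors can be as simple as averaging their scores and checking how far apart they are. In the sketch below, the detector names and scores are placeholders, not real results from any tool.

```python
import statistics


def combine_scores(scores):
    """Summarize AI-probability scores (0.0 to 1.0) from several detectors.

    `scores` maps a detector name to its reported probability of AI origin.
    """
    values = list(scores.values())
    if not values:
        raise ValueError("at least one detector score is required")
    return {
        "mean_ai_probability": statistics.mean(values),
        # A large spread means the detectors disagree, so trust the mean less.
        "spread": max(values) - min(values),
    }


if __name__ == "__main__":
    # Placeholder names and numbers for illustration only.
    print(combine_scores({"detector_a": 0.9, "detector_b": 0.7, "detector_c": 0.8}))
```

When the spread is large, the sensible conclusion is that the evidence is inconclusive rather than that the average is right.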
