Synthetic Users in SEO: What They Are and Why They Matter

The word “bot” has become so common that hardly any internet user hasn’t heard it at least a dozen times. A more technical, academic term for the same thing is “synthetic users” or “simulated users.”

In SEO, synthetic users are a hot topic, sparking debate between those who rely on them for testing and those who condemn their manipulative use.

The goal of this post is to explore the impact of simulated users on SEO performance, carefully weigh the benefits and potential risks, understand how to detect them in analytics, and examine their near future, especially in conjunction with AI bots.

What are synthetic users?

Bots or synthetic users are non-humans by definition. But who are they really?

Bots are nothing more than scripts that interact with your site. They can request pages, trigger JavaScript, and complete forms. In analytics, this shows up as synthetic traffic that only mimics human behavior, and an experienced Google Analytics user can usually tell when a traffic spike has a non-human origin.

Other terms frequently used to denote the same activities include: simulated users, virtual users, emulated agents, artificial visitors, and fake users, to name a few.

It’s critical to understand the two conversations running at once:

- The engineering conversation views simulated sessions as a controlled way to model spikes, measure stability, and validate UX.

- The SEO conversation is about whether fake users are being used to shape behavioral signals and mislead ranking systems.

Not all artificial visitors are the same. Some scripts are built for good reasons: measuring speed, stress-testing a checkout, or checking that a funnel still works after a release. Others are fake users meant to game clicks, inflate dwell time, or create the false impression of popularity.

Here’s what that means in practice:

- Performance testing: Emulated agents hit pages to gauge load and stability.

- UX checks: Simulated sessions walk critical paths to catch breakage.

- SEO manipulation: Bot traffic tries to nudge rankings with fake signals.

- Analytics noise: Artificial activity skews bounce, CTR, and conversions.

The scale is big enough to matter. A recent study by Forbes looked at the impact of AI on bot traffic. The researchers estimate that bots already account for over 50% of all internet traffic, and this number is expected to grow to a whopping 90% by 2030, largely due to the impact of autonomous AI agents.

Under the hood, the meaning of “synthetic users” depends on intent. If your aim is resilience and quality, simulated users are a responsible instrument. If your aim is to bend search signals, you’re in the realm of manipulation and potential search engine penalties (more on this in the sections below).

👉 The bottom line? Simulated users are great for pushing predictable load, verifying user journeys, and catching regressions before customers do.

The same techniques can also produce artificial activity that inflates clicks or dwell time, which is why internet bots carry both neutral and negative connotations in SEO circles.

Why simulated users have become a hot topic in SEO

New things often emerge not because anyone planned or worked toward them, but because the technology that enables them is already there.

That is how internet bots appeared: several technological trends together created pressure for something like synthetic agents to come into existence.

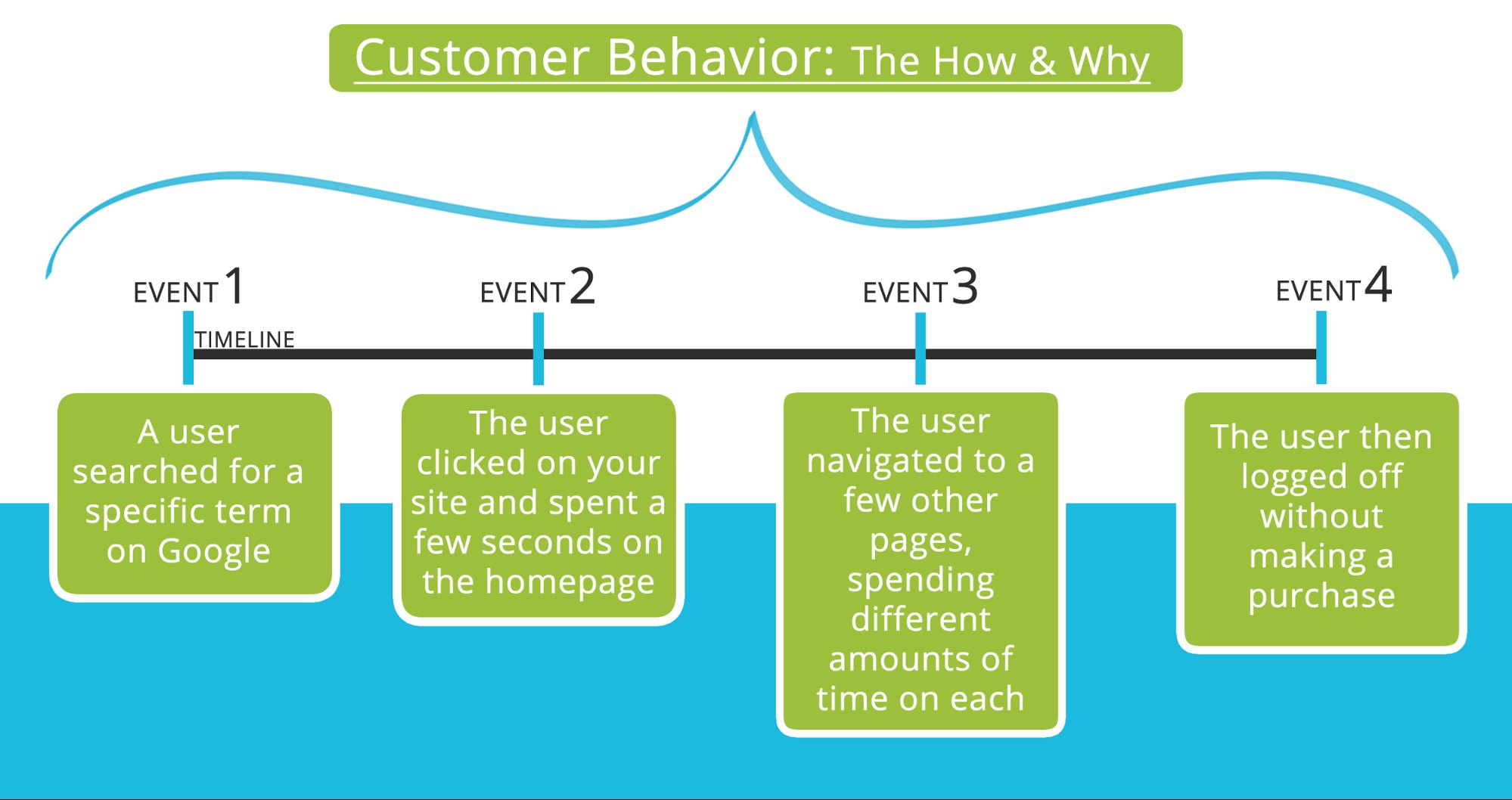

The rise of behavioral signals in search rankings

Ranking systems now care deeply about how people behave, not just what a page says. The story behind a click — what happens next — matters. An effective SEO strategy has to capture this to help websites achieve their goals.

Source: Neilpatel

That shift pulled synthetic users into the spotlight because they can rehearse behavior before launch.

👉 In practice, this means that if your page loads slowly or hides answers, people bail, and search engines notice. Simulated users let you pressure-test the experience without burning trust with real audiences.

Virtual users can help you check page stability, track script timing, and validate journeys across devices. The caution is that they are still code, not people. The key is to treat them like lab instruments, not performance trophies.

Increased reliance on engagement-based metrics

Engagement-based metrics connect design choices to outcomes. Emulated agents give you controlled runs across devices and networks, which is handy when you’re testing risky changes.

Use this checklist as a reference point:

- Attract: Who clicks and why.

- Absorb: Whether visitors stay and read.

- Advance: What steps they take next.

- Achieve: Which outcomes are reached.

- Adverse: Where things break under load.

Marketers tend to keep simulated sessions out of production KPIs. If they slip in, they blur the meaning of progress and make wins look bigger than they are. Remember, scripts teach; people decide.

Even Google has moved in this direction: Google Analytics 4 is built around engagement, and its bounce rate is simply the share of sessions that were not engaged. The term “engagement” sounds more optimistic and is more convenient to work with for many. It is little wonder, then, that synthetic agents have been used to simulate human engagement signals and artificially drive SEO performance.

Growing market for automated SEO tools

Automation has scaled because it saves time and reduces guesswork. Teams can run nightly scripts that click through a journey and alert on regressions. Over time, these runs became as normal as unit tests.
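A nightly journey check of this kind can be sketched in a few lines. The example below is only an illustration: the `Step` structure, the paths, and the `fake_fetch` stand-in (used here instead of a real HTTP client so the sketch runs offline) are all assumptions, not part of any specific tool.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Step:
    name: str
    path: str
    must_contain: str  # text that proves the step rendered


def run_journey(steps: List[Step],
                fetch: Callable[[str], Tuple[int, str]]) -> List[str]:
    """Walk each step in order and return human-readable failures."""
    failures = []
    for step in steps:
        status, body = fetch(step.path)
        if status != 200:
            failures.append(f"{step.name}: HTTP {status}")
        elif step.must_contain not in body:
            failures.append(f"{step.name}: missing '{step.must_contain}'")
    return failures


# Hypothetical stand-in for a real site, so the example needs no network.
FAKE_SITE = {
    "/": (200, "<h1>Shop</h1>"),
    "/cart": (200, "<h1>Your cart</h1>"),
    "/checkout": (500, "error"),
}


def fake_fetch(path: str) -> Tuple[int, str]:
    return FAKE_SITE.get(path, (404, ""))


journey = [
    Step("home", "/", "Shop"),
    Step("cart", "/cart", "Your cart"),
    Step("checkout", "/checkout", "Pay now"),
]
failures = run_journey(journey, fake_fetch)
print(failures)  # ['checkout: HTTP 500']
```

In a real setup, `fetch` would wrap an HTTP client or a headless browser, and a non-empty `failures` list would trigger the alert.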

Common tool categories include:

- Load and soak: Long-running sessions to test stability.

- Synthetic monitoring: Scheduled page checks with screenshots.

- UX pathing: Emulated agents that simulate real journeys.

- Change detection: Compare before/after in a controlled run.

Vendors that thrive help teams stage synthetic users without poisoning analytics. Good tools keep test data in test lanes. That way, your public dashboards still reflect people, and your experiments keep their meaning.
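One way to picture a “test lane” is a small router that checks every incoming event for a marker set by the test runner. This is a minimal sketch under assumptions: the header name `X-Synthetic-Run` and the lane names are hypothetical and would be whatever your own tooling defines.

```python
SYNTHETIC_HEADER = "x-synthetic-run"  # hypothetical header set by the test runner


def route_event(event: dict, headers: dict) -> str:
    """Return the destination lane for an analytics event."""
    # Header names are case-insensitive, so normalize before the lookup.
    normalized = {key.lower(): value for key, value in headers.items()}
    run_id = normalized.get(SYNTHETIC_HEADER)
    if run_id:
        event["synthetic_run_id"] = run_id  # label the event for later audits
        return "test_lane"
    return "production"


print(route_event({"name": "page_view"}, {"X-Synthetic-Run": "nightly-42"}))  # test_lane
print(route_event({"name": "page_view"}, {"User-Agent": "Mozilla/5.0"}))      # production
```

The point of the design is that labeling happens at collection time, so public dashboards never have to guess which sessions were scripted.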

Can bot traffic improve your SEO metrics?

This question seems rhetorical at first glance. Since we’ve already discussed how the changing technological landscape and pressure from new search algorithms led to the bot boom, it may seem obvious that SEO gains will follow. However, it’s not as simple as it seems, and there are a few important caveats to consider.

Source: Questionpro

Impact on bounce rate and dwell time

If your instrumentation marks a heartbeat as engagement, bots lower bounce. That’s a measurement quirk, not a UX win. A real user may still leave quickly.

Bots inflate dwell time since they wait for the page to finish loading before moving on. The clock continues even when nothing meaningful happens. Remember that this is just time on the page, not attention to the content.

Therefore, be careful not to blend these traces into decision dashboards. Your search performance KPIs should reflect people. Keep synthetic traffic separate, even if the bots’ activity makes for a visually pleasing dashboard by inflating your SEO metrics. You may feel pleased to report the good-looking numbers to management, but the gains are artificial.
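A small worked example shows how much the blend can distort the picture. The engagement rule below is loosely modeled on how GA4 describes an engaged session (longer than 10 seconds, two or more page views, or a key event); the session data and field names are invented for illustration.

```python
def is_engaged(session: dict) -> bool:
    """Assumed engagement rule, loosely modeled on GA4's engaged session."""
    return (session["seconds"] > 10
            or session["pageviews"] >= 2
            or session["key_event"])


def bounce_rate(sessions: list) -> float:
    """Share of sessions that were not engaged."""
    if not sessions:
        return 0.0
    bounced = sum(1 for s in sessions if not is_engaged(s))
    return bounced / len(sessions)


# Ten human sessions: six leave within seconds, four read and click around.
humans = ([{"seconds": 4, "pageviews": 1, "key_event": False}] * 6
          + [{"seconds": 95, "pageviews": 3, "key_event": False}] * 4)
# Ten scripted sessions that idle for 30 seconds on a single page.
bots = [{"seconds": 30, "pageviews": 1, "key_event": False}] * 10

print(f"humans only: {bounce_rate(humans):.0%}")         # humans only: 60%
print(f"humans + bots: {bounce_rate(humans + bots):.0%}")  # humans + bots: 30%
```

The page did not get any better between the two lines of output; the only change is that idle scripts were counted as engaged visitors.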

How synthetic users influence click-through rates and rankings

Click-through-rate (CTR) scripting manipulates the click pathway. Even though logs may show more clicks to the same result and longer stays, the real ranking impact is noisy and often short-lived. As the saying goes, no pain, no gain, and that holds for bots’ impact on CTR.

Here is how bots trick the above-mentioned SEO metrics:

- The bot runs a search for a chosen term.

- It selects the target link from the results.

- It pauses on the page for a short while.

- It does the same thing again and again.

Filters look for uniform loops and unusual ratios. Without follow-up human interest, the pattern fades. Real user activity is what keeps rankings steady, and modern AI-powered search engines are getting better at recognizing it.

Virtual users and backlink signals

It’s hardly a secret that backlink value depends on audience trails. While virtual users can walk the trail, they can’t create it. The true authority signal comes from humans who care.

When referrals spike without secondary actions, Google’s systems infer weak quality. The visit exists, the meaning doesn’t. That separates test health from real influence.

SEO pros keep synthetic referrals for quality assurance (QA). At the same time, they anchor authority measurements in human traffic and engagement. That’s how search performance metrics stay trustworthy.

Effect on conversion-related metrics

Bots can submit forms and trigger custom events. That inflates SEO performance metrics if goals are defined broadly and the team isn’t tracking KPIs tied to business performance. The revenue dashboards, however, won’t agree.

Look for these technical fingerprints:

- Uniform dwell: Identical wait times precede goals.

- Burst patterns: Conversions arrive in tight batches.

- Device sameness: The same headless profile repeats.

- Attribution spikes: One channel suddenly “wins.”

If your goal is to assess the real SEO performance, run tests in clearly marked environments, and apply strict rules for what counts as an event. Confirm improvements with human segments, and keep user actions as the ultimate success yardstick.
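Three of the fingerprints listed above (uniform dwell, burst patterns, device sameness) are easy to check on exported conversion data. The sketch below is a rough heuristic under assumptions: the field names `ts`, `dwell`, and `user_agent` and all thresholds are hypothetical and would need tuning for a real site.

```python
from collections import Counter
from statistics import pstdev


def fingerprint(conversions, burst_window=60, burst_size=5, min_sample=3):
    """Return bot-like flags for a list of conversion records.

    Each record is a dict with 'ts' (epoch seconds), 'dwell' (seconds
    before the goal), and 'user_agent'. Thresholds are illustrative.
    """
    flags = []
    if len(conversions) < min_sample:
        return flags

    # Uniform dwell: wait times before the goal barely vary.
    if pstdev(c["dwell"] for c in conversions) < 1.0:
        flags.append("uniform_dwell")

    # Burst pattern: burst_size conversions inside one short window.
    times = sorted(c["ts"] for c in conversions)
    for i in range(len(times) - burst_size + 1):
        if times[i + burst_size - 1] - times[i] <= burst_window:
            flags.append("burst")
            break

    # Device sameness: one user agent accounts for nearly everything.
    top_count = Counter(c["user_agent"] for c in conversions).most_common(1)[0][1]
    if top_count / len(conversions) >= 0.9:
        flags.append("device_sameness")
    return flags


scripted = [{"ts": 5 * i, "dwell": 30, "user_agent": "HeadlessChrome"}
            for i in range(6)]
organic = [
    {"ts": 0, "dwell": 12, "user_agent": "Chrome"},
    {"ts": 4000, "dwell": 95, "user_agent": "Safari"},
    {"ts": 9000, "dwell": 40, "user_agent": "Firefox"},
    {"ts": 20000, "dwell": 230, "user_agent": "Chrome"},
    {"ts": 31000, "dwell": 61, "user_agent": "Safari"},
    {"ts": 50000, "dwell": 18, "user_agent": "Firefox"},
]
print(fingerprint(scripted))  # ['uniform_dwell', 'burst', 'device_sameness']
print(fingerprint(organic))   # []
```

A flag is a reason to look closer, not proof of manipulation; a flash sale can produce a legitimate burst.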

The two most frequent usage scenarios: ethical vs unethical

When you browse this topic online, you’re most likely to come across two frequent use scenarios, each with its own camp of fierce proponents and opponents.

In short, synthetic users can be used for good and for bad purposes, aka ethical vs unethical SEO. In this section, we’ll explore both scenarios and look at their benefits and downsides to see if the debating parties really have a rational argument.

Synthetic users and traffic manipulation: a risky SEO shortcut

When artificial activity simulates humans, you may get nicer charts, but your problems will stay with you. That may help if you want to impress someone (management reporting, stakeholder meetings, etc.), but it is utterly useless if real progress is your goal.

That’s why the practice is generally regarded as unethical. The user experience doesn’t improve; only the numbers do.

It also hurts experimentation. A test only works when the baseline is real. If you blur it, the next test confirms a story you scripted. You end up with a distorted picture of reality, not reflecting your real pains and challenges. It’s only a vision of progress, not the progress itself.

Here are the three known risks and limitations of synthetic users:

- Data distortion and unreliable insights – Artificial signals inflate search performance KPIs, so teams pick the wrong priorities. Your next test repeats the bias.

- Possible penalties from search engines – Detection models look for uniform loops, unlikely ratios, and shallow follow-ups. Getting flagged hurts, and future test runs may inherit the risk.

- Wasted resources on artificial growth – Money and time flow to scripts instead of content, speed, and design. Each test buys a chart, not a change.

It’s a slippery slope. Faking progress is easy; quitting the practice is not. Moreover, once you “earn” a reputation as a bot cheater in the professional community, on discussion forums, and among users, this bad image will stick with you for a long time.

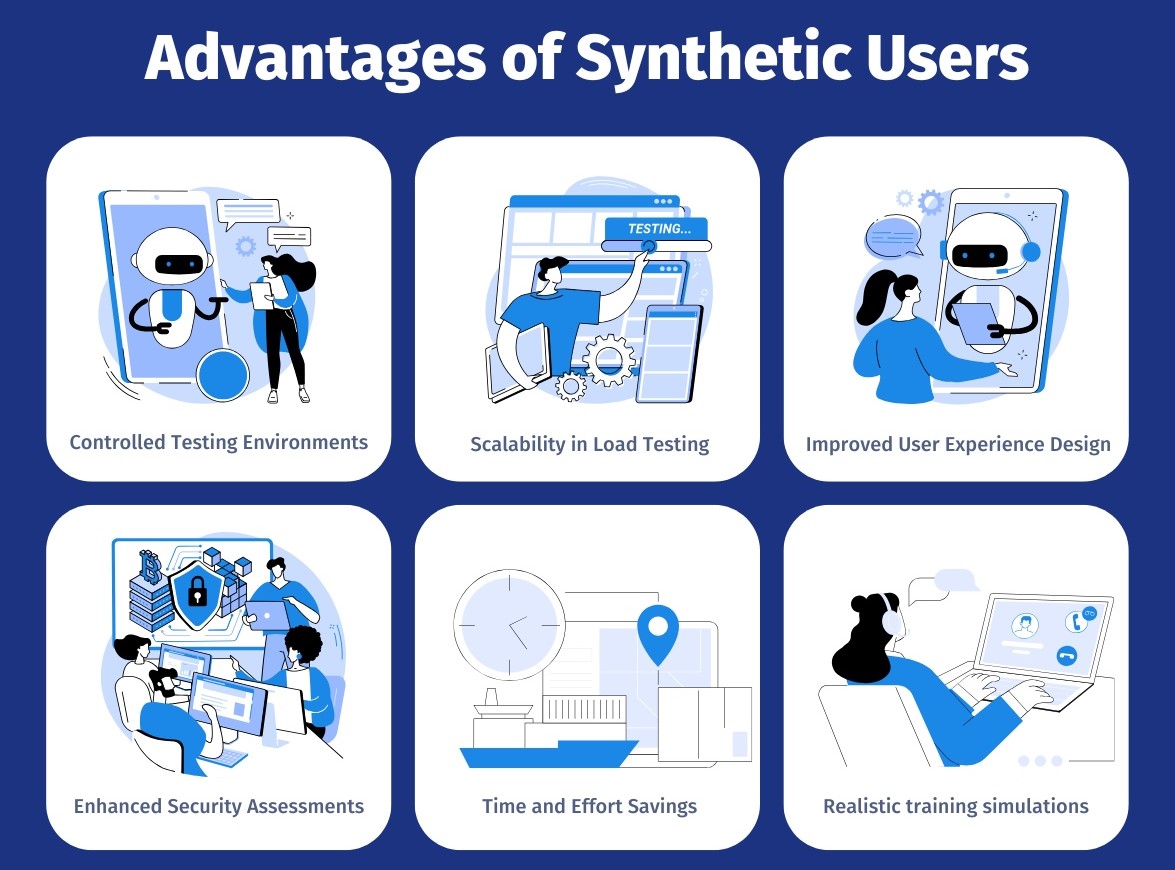

Testing website performance with simulated users

In testing, simulated users are a net positive. They let you test safely, quickly, and repeatedly. They also lower the risk before launch.

Consider these three major benefits of synthetic users for website testing:

- Load testing and performance benchmarking – Stress pages safely and repeat the test on demand. You learn how the system behaves under pressure.

- Identifying weak points in user journeys – Script real paths to find the step that breaks or delays. The test leads directly to a fix.

- Measuring Core Web Vitals under controlled conditions – Keep device and network constant, then rerun the test after a change to confirm better vitals.

With separation in place, your website KPIs stay honest. Test signals help engineering, while people validate outcomes. That’s ethical, practical, and sustainable.

In the end, simulated sessions make great instruments. They save time and money on real-world testing, enabling you to find an optimal setup in a controlled (safe) environment.
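The before/after comparison for Core Web Vitals can be sketched as follows. Google assesses these metrics at the 75th percentile, and 2.5 seconds is the commonly cited “good” threshold for Largest Contentful Paint (LCP); the timing samples themselves are made up for illustration.

```python
import math

LCP_GOOD_MS = 2500  # commonly cited "good" threshold for LCP


def p75(values):
    """75th percentile using the nearest-rank method."""
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


# Hypothetical LCP samples (ms) from identical scripted runs,
# same device profile and network throttling before and after a change.
before = [2900, 3100, 2700, 3300, 3000, 2800, 3200, 3400]
after = [2200, 2400, 2100, 2600, 2300, 2500, 2000, 2700]

print(f"LCP p75: {p75(before)} ms -> {p75(after)} ms")  # LCP p75: 3200 ms -> 2500 ms
print("good" if p75(after) <= LCP_GOOD_MS else "needs work")  # good
```

Because device and network are held constant, the 700 ms difference can be attributed to the change rather than to noise in the audience mix. Field data from real users should still confirm it.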

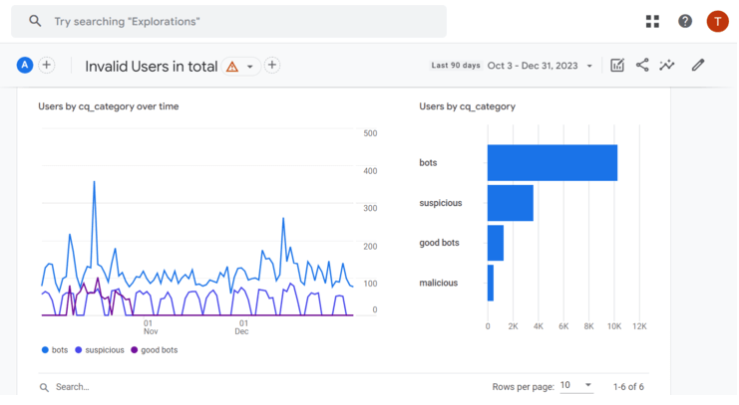

How to detect synthetic users in Google Analytics

The activity generated by synthetic users can be detected with several tools, and sometimes even with the naked eye, when an unusual spike appears and none of the usual reasons explains it.

However, most SEO practitioners prefer to use Google Analytics 4 (GA4) or its paid tier, Google Analytics 360. Both have ample functionality to detect, track, and analyze simulated sessions in real time.

Source: Advancemetrics

Spotting unusual traffic spikes

Always start with a simple sanity check: if nothing common (seasonal peaks, holidays, new releases, discounts, etc.) can explain a change in traffic numbers, treat it as suspicious.
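If you export daily session counts, the same sanity check can be automated. The sketch below uses a plain z-score against a trailing baseline; the threshold of 3 and the sample numbers are assumptions, and a production version would also account for weekday and seasonal patterns.

```python
from statistics import mean, pstdev


def is_spike(history, today, z_threshold=3.0):
    """Flag today's count if it sits far above the trailing baseline."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return today > mu  # flat baseline: any increase stands out
    return (today - mu) / sigma > z_threshold


last_week = [1200, 1150, 1300, 1250, 1180, 1220, 1270]  # hypothetical daily sessions
print(is_spike(last_week, 4100))  # True
print(is_spike(last_week, 1310))  # False
```

A `True` here only means “go and look for an explanation”; a campaign launch or press mention is still the first thing to rule out.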

In GA4, check Realtime first:

- Open your property and go to Reports → Realtime (left sidebar).

- Look at the Users in the last 30 minutes card, the map, and the Top sources and Top locations tables.

- Ask: Do you see an unexpected country, city, or source suddenly dominating? If yes, make a note of it.

Compare with Traffic acquisition (same day):

- In the left sidebar, go to Reports → Acquisition → Traffic acquisition.

- Set the date range (top-right) to Today (or the same window as Realtime).

- In the table, open the Primary dimension dropdown (top of the table) and switch among:

  - Session default channel group.

  - Session source/medium.

  - Session campaign.

This lets you see the same spike from different angles.

For more details, add quick filters (Comparisons):

- At the top of the report, click Add comparison.

- Choose a Dimension like Country, City, or Source / Medium, then pick the suspicious value you saw in Realtime.

- Click Apply to overlay that segment on the charts and table. If the spike is mostly that source or location, that’s a red flag.

Drill into even more details (extra columns):

- In the Traffic acquisition table, click the small “+” (Add dimension) button next to the current primary dimension on the header row.

- Add secondary details such as Country, Region, City, Device category, Browser, or Language.

- Scan the rows. Signs of artificial activity include:

  - One source/medium suddenly taking most traffic with no campaign running.

  - A single country/city you don’t usually serve.

  - Almost all traffic on one device category (e.g., only mobile).

  - The same browser or an uncommon language appearing in bulk.

You can now cross-check patterns:

- Switch the Primary dimension between Session default channel group, Session source/medium, and Session campaign to see if the spike persists across views.

- Keep your comparison active while you switch dimensions to confirm it’s the same cohort causing the spike.

These simple actions should give you plenty of data to analyze and to present to your team to start a discussion. It’s important to distinguish between artificial traffic and real users to keep your SEO performance monitoring clean and accurate.
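The red flags from the drill-down (one source, country, or device taking most of the traffic) boil down to a share calculation. Here is a minimal sketch on exported session rows; the field names, the 60% threshold, and the sample data are all hypothetical.

```python
from collections import Counter


def dominant_values(sessions, dimension, share_threshold=0.6):
    """Return values of a dimension whose share of sessions meets the threshold."""
    counts = Counter(s[dimension] for s in sessions)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {value: round(n / total, 2)
            for value, n in counts.items()
            if n / total >= share_threshold}


# Hypothetical spike window: 8 of 10 sessions share one referrer and country.
spike = (
    [{"source": "unknown-referrer.example / referral", "country": "XX",
      "device": "mobile"}] * 5
    + [{"source": "unknown-referrer.example / referral", "country": "XX",
        "device": "desktop"}] * 3
    + [{"source": "google / organic", "country": "US", "device": "desktop"}] * 2
)

print(dominant_values(spike, "source"))   # {'unknown-referrer.example / referral': 0.8}
print(dominant_values(spike, "country"))  # {'XX': 0.8}
print(dominant_values(spike, "device"))   # {}
```

Running the same function across several dimensions mirrors switching the primary dimension in the report: if the same cohort dominates every view, the spike has a single origin.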

Key patterns of bot-driven sessions

Funny enough, the very same trait that AI proponents often cite as proof of superiority over humans can help SEO practitioners recognize patterns of artificial activity and detect bot behavior.

What is that trait? Bots never get tired and need neither coffee breaks nor vacations; they just work day and night and generate predictable patterns of activity. This “superiority” easily shows in your reports as unusually long spikes, repeated shapes, and smooth lines above the record threshold.

Source: Clickcease

You just need to map the spike against known activities. If nothing matches, examine how the traffic behaves step by step. Your human brain and the knowledge of how humans work are your key advantages in reading patterns of bot-driven sessions.

Make sure to verify the following simple signals:

- Spikes appear at predictable times, then repeat with clocklike precision.

- A new market floods with visits, unrelated to language or shipping regions.

- Goal completions increase, but qualified leads and orders do not.

- Traffic surges without campaigns, launches, or news to explain them.

- One country dominates suddenly, outside your usual audience and markets.

- Engagement times look identical, repeating exact durations across many sessions.
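The “clocklike precision” signal from the list above can be tested by looking at the gaps between spike start times. This sketch uses the coefficient of variation of those gaps; the 5% cutoff and the timestamps are assumptions for illustration.

```python
from statistics import mean, pstdev


def looks_clocklike(spike_times, max_cv=0.05):
    """True if gaps between spikes are nearly identical.

    spike_times: sorted epoch seconds marking the start of each spike.
    max_cv: maximum coefficient of variation (std / mean) of the gaps.
    """
    gaps = [later - earlier
            for earlier, later in zip(spike_times, spike_times[1:])]
    if len(gaps) < 3 or mean(gaps) == 0:
        return False  # too few spikes to judge regularity
    return pstdev(gaps) / mean(gaps) <= max_cv


hourly_job = [0, 3600, 7200, 10800, 14400]   # a spike exactly every hour
human_waves = [0, 5000, 6200, 19000, 40000]  # irregular bursts of interest

print(looks_clocklike(hourly_job))   # True
print(looks_clocklike(human_waves))  # False
```

Scheduled email sends and cron-driven campaigns can also look clocklike, so check the result against your own calendar before blaming bots.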

As of the last quarter of 2025, bots are still not very autonomous; they do exactly what they’re told. That discipline creates patterns people don’t naturally produce, and this is exactly why you can spot and identify artificial behavior on your website.

👉 Your job is to remain human and guard what other humans value (e.g., high UX standards, real engagement signals, incentives, etc.).

Using advanced filters and custom segments

Detecting spikes is step one; step two is keeping them out of your core SEO data.

This can be done with the help of advanced filters and custom segments in GA4. Essentially, they let you take suspected bot traffic and put it under the microscope.

You can follow these simple steps:

- Go to any Report and select Add comparison. Pick a dimension — say Country or Device category — and choose the odd value. This will split the data so you can see exactly what that segment is doing.

- Then move to the Explore function. Build a new segment with detailed conditions, like “Source/Medium = direct” plus “Engagement time exactly 30 seconds.” Save it, and run an exploration where you compare it against normal traffic.

- For closer analysis, you can create a custom segment. Here you can build rules: for example, sessions from “Browser = Safari 14” with “Dwell time < 5 minutes.”

- Finally, if you want to track it continuously, create an audience under the Admin section. GA4 will maintain it for you, giving ongoing visibility.

With filters, segments, and audiences, you take full control of your data. Your SEO performance indicators stay trustworthy, and suspicious traffic doesn’t cloud your reporting. Simple steps, but big gains for business performance optimization.
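The same segment logic can be applied to exported session data outside the GA4 interface. The sketch below expresses a segment as a list of simple rules; the field names `source_medium` and `engagement_seconds` are hypothetical and do not correspond to a specific GA4 export schema.

```python
import operator

OPS = {"==": operator.eq, "<": operator.lt, ">": operator.gt}


def in_segment(session, rules):
    """True if the session satisfies every (field, op, value) rule."""
    return all(OPS[op](session[field], value) for field, op, value in rules)


# Mirrors the example condition: direct traffic with exactly 30 seconds of engagement.
suspect_rules = [
    ("source_medium", "==", "(direct) / (none)"),
    ("engagement_seconds", "==", 30),
]

sessions = [
    {"id": 1, "source_medium": "(direct) / (none)", "engagement_seconds": 30},
    {"id": 2, "source_medium": "(direct) / (none)", "engagement_seconds": 30},
    {"id": 3, "source_medium": "google / organic", "engagement_seconds": 30},
    {"id": 4, "source_medium": "(direct) / (none)", "engagement_seconds": 74},
]

suspect_ids = [s["id"] for s in sessions if in_segment(s, suspect_rules)]
print(suspect_ids)  # [1, 2]
```

Keeping rules as data makes it easy to review them with the team and to reuse the same definition for reporting and for exclusion.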

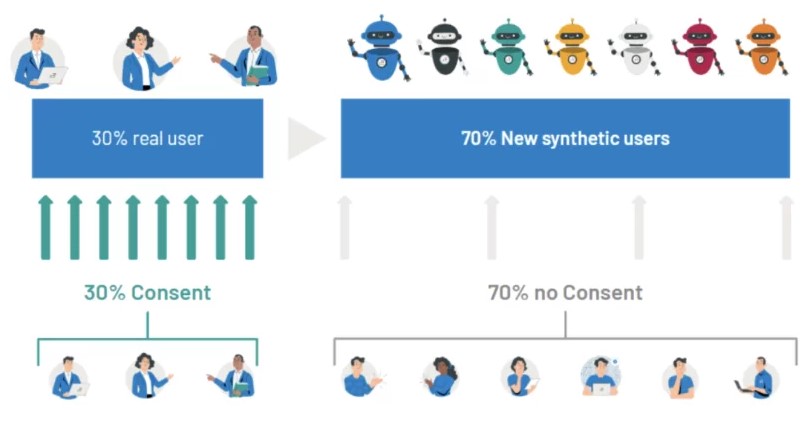

Real alternatives to virtual users

As we have seen, virtual users can be tricky and often indicate unethical and manipulative practices, which can trigger search engine penalties and human rebukes.

The good news is that there are real alternatives to synthetic agents: real users. These are practices that incentivize humans to be more active and help SEO specialists test and improve major SEO KPIs.

Leveraging genuine user testing platforms

Platforms that source real participants uncover problems robots miss. The technology is simply not yet at the level where it can match human analytical capabilities, at least not in critical thinking and the ability to generalize.

You get spoken feedback, eye-tracking cues, and authentic confusion. That context guides your SEO and business decision-making and helps make real progress.

By contrast, simulated users are precise, but not curious. Real people try shortcuts, skim headings, and use search in odd ways. Their moods fluctuate: they may feel depressed, motivated, skeptical, or engaged, and all of this shows in their actions and feedback. Those authentic behaviors expose design gaps you wouldn’t script.

Here are a few examples of such testing platforms:

- UserTesting – one of the most established platforms for on-demand usability testing with real people.

- Maze – offers remote testing with clickable prototypes and post-test analytics.

- PlaybookUX – combines moderated and unmoderated tests with video feedback.

- Loop11 – lets you run usability tests, A/B comparisons, and task-based studies.

- Optimal Workshop – best known for card sorting and tree testing to improve site navigation.

👉 These platforms show what real alternatives to virtual users look like in practice: services that give you authentic user behavior, spoken feedback, and emotional reactions you’ll never get from fake users or bot traffic.

Using focus groups and beta testers

Focus groups help you hear how customers describe your value. It’s an old-fashioned way of collecting feedback and testing your product or service offerings, but with new technologies, focus groups can now run and deliver results online.

Betas help you see how features behave outside the lab. Together, they make your next test more realistic.

Use this short setup plan:

- Recruit beta testers and organize focus groups by need, role, and familiarity level.

- Share with them a simple script and timeboxed test tasks.

- Capture confusion points and exact quotes (besides fixed/multiple choice answers).

- Ask for one improvement per participant in an open-ended question format.

- Pay attention to the number of similar voices, rather than the loudest ones.

Run a quick beta test after the focus group assessment. Confirm that the most common blockers, problems, and downsides are gone. If not, the next test should target those.

Testing can take significant time, sometimes days or weeks. Make sure you set aside enough time and patience.

When you finally close the loop, tell participants what changed. Respect builds a steady pipeline of willing testers and better user insight.

Encouraging authentic engagement through UX

Real engagement happens when answers are easy to find, and the process of finding them is as streamlined as possible. Clean layouts and obvious steps are always better than tricks and shortcuts. For genuine user engagement, stay away from manipulative practices with synthetic users or bot traffic.

Try these small, high-signal changes:

- Write labels that say exactly what happens next.

- Put critical content where scanning eyes actually land.

- Offer presets to reduce setup time for each user.

- Show progress and remaining steps clearly.

- Confirm success and suggest the next action.

Engaged users will be willing to make a discretionary effort on your site, which often translates into meaningful actions (conversions).

👉 And the best part: unlike tricks with synthetic users, real engagement lasts and pays off in the form of client loyalty and advocacy. Loyal clients will vouch for your brand, product, and services, and recommend them to their friends and families.

The future of synthetic users in SEO

An average person intuitively perceives bots as something that is here to stay and will not change much. That is the main drawback of linear human thinking: it fails to grasp that technology develops exponentially.

Year after year, digital technologies are getting more powerful, and past a certain threshold, their cognitive and intellectual capabilities may grow explosively.

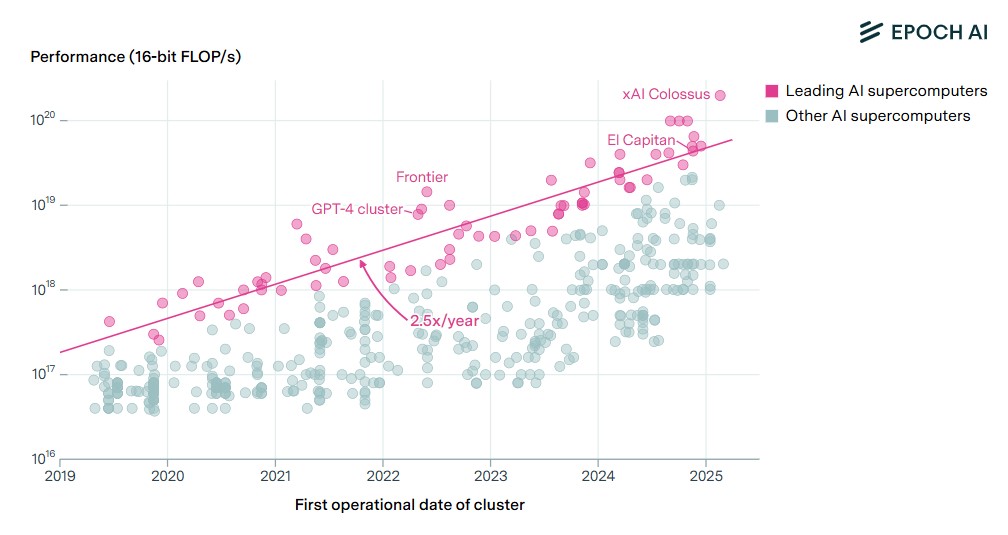

Some AI researchers and futurists believe that artificial general intelligence (AGI) could arrive within the next 2–4 years, i.e., by 2027–2029. That would essentially mean AI systems matching the intelligence of the human brain.

Source: EpochAI

What does it mean for SEO and the role of synthetic users? If those forecasts hold, within a couple of years we’ll be talking not just about bots and scripts, but about fully autonomous AI agents with intelligence equal to or higher than that of humans, able to exhibit more complex behavior than humans.

For the first time in human history, we’ll face an artificial cognitive abundance that will be essentially free and available to everyone. Let’s discuss these points at length and consider the implications for digital signals and search engines’ ability to spot them.

AI-powered bots and realistic browsing behavior

Virtual users will soon act like hurried shoppers and careful readers. They’ll skim, pause, reopen tabs, and give up when friction piles up. Much of this realism will be tuned by AI.

People will either stay out of the loop entirely or control the bots’ behavior directly. Some even fear that AI agents will become vastly smarter than humans and dominate us as a species.

As interfaces get richer, emulated agents will learn timing and tone. They will slow down at decision points and speed through boilerplate. With AI in the loop, the surface looks human.

Within the next year or two, watch out for the following behaviors that will feel surprisingly real:

- Fewer straight-line mouse moves; more curved, human-like paths.

- Short re-reads of headings after mismatched expectations.

- Tabbing to compare similar products, then a firm choice (all within seconds).

- Idle moments after modals and consent prompts appear.

- Abandonment spikes on slow third-party widgets.

- Recovery from form errors that look human, not scripted.

This makes analytics harder to trust at a glance. Without tagging, simulated sessions seep into KPIs. As AI improves, your filters and documentation must improve, too.

👉 So, keep simulations out of your wins. Store their results elsewhere and note their purpose. That discipline will protect you from obscuring the achievement of real, business-driven KPIs.

Will search engines get better at spotting them?

Most likely, yes. As in any arms race, such as the one between AI-made content and AI content detectors, search engines’ algorithms will be upgraded to recognize bot-like behavior, even when that behavior is as intelligent as a human’s.

Already today, engines reward durability, not theatrics. They check whether attention becomes loyalty and advocacy. One tidy week won’t outrun a thin six months.

They also widen the lens. Cross-site and time-series checks expose brittle tricks. Practitioner teams use AI to spot clusters that humans miss.

If your improvements help people, they will echo in other data. If not, the lift fades quickly.

From task bots to autonomous AI agents

Today, bots are heavily programmed by humans. They won’t do what they are not told to do. They depend on human instructions and don’t show any significant autonomous behavior, at least beyond their standard program.

However, that will change quickly.

In 2025, we’re already rapidly moving from scripts to planners. By early 2026, agents won’t just run a test; they’ll suggest one, defend it, and revise it. They’ll also monitor competitors and summarize shifts.

👉 Two important notes before specifics: keep humans in the loop, and log everything. You want a clear record of the choices AI made and why.

What will the agents be capable of and responsible for? Most likely, they will:

- Propose hypotheses with expected effect sizes.

- Select metrics and thresholds aligned to business goals.

- Execute runs completely autonomously, then pause when anomalies spike.

- Produce digestible summaries with evidence links (that’s already happening).

- Escalate ambiguous results for human judgment.
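The “run, log, pause, escalate” pattern from this list can be illustrated with a toy guardrail loop. Everything here is a hypothetical sketch: the metric stream, the 50% tolerance, and the log format are assumptions, and a real agent framework would look quite different.

```python
def run_with_guardrails(metric_stream, baseline, tolerance=0.5):
    """Process readings one by one; pause and escalate on a large deviation.

    Returns a (status, log) pair, where log records every decision made.
    """
    log = []
    for step, value in enumerate(metric_stream):
        deviation = abs(value - baseline) / baseline
        if deviation > tolerance:
            log.append({"step": step, "action": "pause",
                        "reason": f"deviation {deviation:.0%} from baseline"})
            return "escalated", log  # hand over to a human reviewer
        log.append({"step": step, "action": "continue", "value": value})
    return "completed", log


status, log = run_with_guardrails([100, 104, 98, 260, 101], baseline=100)
print(status)   # escalated
print(log[-1])  # {'step': 3, 'action': 'pause', 'reason': 'deviation 160% from baseline'}
```

The log is the important part: it gives reviewers a clear record of what the automation did and why it stopped.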

The increased autonomy of AI agents will likely be warmly welcomed by businesses and governments. Why? Because when done right, this frees teams from manual chores. Done wrong, it scales noise.

The cultural change is real. Analysts become editors of ideas, not gatherers of rows. That’s a healthier balance in busy teams.

For now, the secret to success is to keep AI within clear bounds and your virtual users out of production KPIs.

Cognitive abundance and its impact on digital signals

Cognition at near-zero cost changes incentives. If anyone can flood charts, charts stop persuading. Going forward, SEO practitioners must prioritize signals with human roots.

👉 Before we jump to the list, one core principle: value relationships over moments. Let AI help measure longevity, not just peaks.

Now let’s consider which signals are likely to retain value:

- Cohorts that return after natural time breaks.

- Verified community endorsements and credible backlinks.

- Healthy ratios of support, reviews, and repeat usage.

- Normal device diversity and realistic browsing variance.

- Revenue and retention that map to traffic patterns.
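The first signal on this list, cohorts that return after natural time breaks, can be measured with a simple share calculation. This is a sketch under assumptions: visits are given as day indexes per user, and the 7-day gap is an arbitrary choice for what counts as a “natural break.”

```python
def returning_share(visits_by_user, min_gap_days=7):
    """Share of users with at least one gap of min_gap_days between visits."""
    if not visits_by_user:
        return 0.0
    returned = 0
    for days in visits_by_user.values():
        ordered = sorted(days)
        if any(later - earlier >= min_gap_days
               for earlier, later in zip(ordered, ordered[1:])):
            returned += 1
    return returned / len(visits_by_user)


# Hypothetical visit days (day 0 = start of the observation window).
cohort = {
    "a": [0, 9, 30],  # came back after a week, then after three weeks
    "b": [0],         # single visit
    "c": [1, 2, 3],   # a short burst, then silence
    "d": [5, 40],     # came back after more than a month
}
print(returning_share(cohort))  # 0.5
```

Scripted traffic tends to look like user "c": dense activity with no return after a pause, which is why this kind of metric is harder to fake cheaply.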

In the age of cognitive abundance, one core SEO principle remains: design for resilience, not spectacle. Build measurement that rewards the slow, steady proof of interest. In that landscape, synthetic users, no matter how smart they are, fade into the background.

The future can be helpful if we keep it ethical. Clear tagging, fair tests, and outcome-based metrics will carry us forward.

Conclusion

The notion of synthetic users refers to non-human users, largely bots and scripts. It didn’t come into existence through a deliberate invention; rather, it was a product of technological progress itself. Once computer systems became sufficiently advanced, the appearance of bots was inevitable.

In SEO, the existence of bots has at least two articulated usage scenarios:

- The first is manipulative: virtual users simulate human activity to artificially boost SEO metrics. An experienced SEO analyst can usually distinguish genuine human sessions from simulated ones, though.

- The second is testing: bots are deliberately dispatched to simulate user activity, often at peak load, to test systems, new approaches, product releases, etc.

These two scenarios represent the unethical and ethical takes on synthetic agents. While some are tempted to use bots to boost their SEO metrics, the real business KPIs often remain unreached, and Google’s penalties for using bots can set them back even further.

Even more ambiguous is the future of synthetic users in the SEO context. As AI systems keep progressing, in a couple of years we are likely to see fully autonomous AI agents and systems that are smarter than humans.

The key questions become: How do we distinguish genuine human activity from artificial activity? How do we ensure we remain in control of these smarter-than-us entities? And will they continue to help achieve our goals, or will they pursue their own?

One practical way to mitigate these risks is to build strict transparency into SEO data pipelines — clearly labeling test traffic, isolating artificial activity, and relying on trusted human feedback loops to keep AI in service of real business goals.
